With the recent popularization of GPT-4, we believe that we are on the cusp of a new wave of innovation that will affect every industry globally. As long-term technology investors, we see the artificial intelligence (AI) revolution as a fresh source of actionable investment ideas. Here is a recent interview we conducted with GPT-4, which also introduces in more detail one of the new investments of Sycomore Sustainable Tech: MongoDB, a next-generation database.

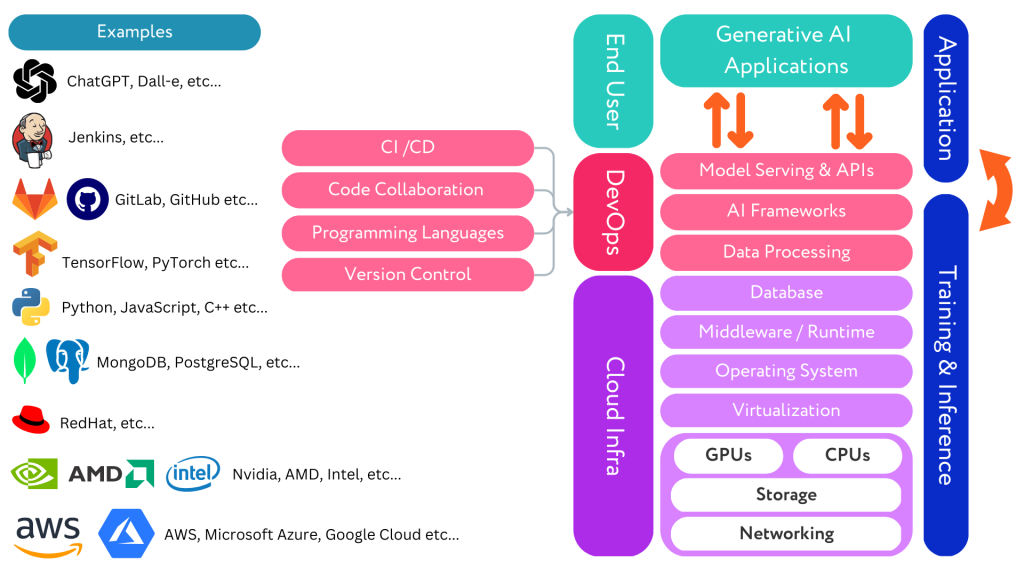

Since the beginning of the year, the Sycomore Sustainable Tech team has been analyzing some of the tangible impacts that the recent popularity of OpenAI's ChatGPT could have on our investment universe. We continue to deepen our research; in the meantime, we thought it would be interesting to share what GPT-4 itself thought of the potential composition of a generative AI vendor's technology stack. Specifically, we asked it which software and hardware companies would provide the most successful technology setup for developing a highly scalable large language model like GPT.

Among the companies that appear to be key to creating a GPT-4-like technology, our Sycomore Sustainable Tech fund is already invested in AMD, GitLab, Microsoft, MongoDB and NVIDIA. We see these companies as truly disruptive businesses whose models are well positioned to have a real impact on the portfolio.

What is a tech stack?

A "tech stack" refers to the combination of technologies needed for the development and implementation of a technological application. This includes not only the programming languages employed but also the assortment of frameworks and tools that developers use to design, launch, manage, and operate the application.

An Interview With GPT

If we were to start a generative AI company from scratch, can you walk me through the preferred technology stack you would use, from hardware to software? Focus on the companies most likely to be selected as part of a generative AI application.

GPT-4: Starting a generative AI company requires a well-planned tech stack that enables efficient research, development, and deployment of AI models. However, here is a suggested tech stack, starting from the hardware level:

GPU: NVIDIA GPUs (such as A100, V100, or RTX series) for efficient training and inference of deep learning models.

CPU: High-performance CPUs (e.g., Intel Xeon or AMD EPYC) for general-purpose computing and data processing.

Storage: High-capacity SSDs or HDDs for storing large datasets, model checkpoints, and generated content.

Networking: High-speed, low-latency network connections for efficient data transfer and model serving.

What about the type of cloud infrastructure?

GPT-4:

Cloud Providers: AWS, Google Cloud Platform, or Microsoft Azure for scalable compute, storage, and networking resources.

Containers: Docker for creating, deploying, and managing portable containerized applications.

Container Orchestration: Kubernetes for automating the deployment, scaling, and management of containerized applications.

Every software needs an operating system – which one makes the most sense for this use case?

GPT-4: Linux distributions (e.g., Ubuntu or CentOS) for compatibility with most AI frameworks and tools.

What about the best programming languages for generative AI?

GPT-4: Python for its extensive ecosystem of AI and data science libraries. Other languages (e.g., JavaScript, Java, or C++) for specific use cases, such as web development, mobile apps, or high-performance computing.

There are already some widely used AI frameworks – which ones should we focus on?

GPT-4: TensorFlow and Keras for building, training, and deploying deep learning models. PyTorch for its flexibility and dynamic computation graph, which is particularly useful for research and experimentation. Transformers library by Hugging Face for pre-trained language models and easy integration with generative AI models.

Data is the foundational layer of this technology – can you share your thoughts on the best-of-breed processing and database tools a large-scale AI technology should use?

GPT-4:

Data Processing: Pandas and NumPy for data manipulation and numerical computations.

Databases: Microsoft CosmosDB, MongoDB (NoSQL) and/or PostgreSQL (SQL) for storing and managing training data, metadata, and generated content.
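To make the difference between the two database families named in this answer more concrete, here is a minimal sketch that stores the same piece of training-run metadata first as a relational (SQL) row and then as a schema-free document (NoSQL). It is a toy built on stated assumptions, not a description of any vendor's product: Python's built-in `sqlite3` stands in for PostgreSQL, a plain list of dictionaries stands in for a MongoDB collection, and every table, field, and value (`training_runs`, `run_id`, `tags`, and so on) is hypothetical.

```python
import json
import sqlite3

# Hypothetical metadata for one training run (all names and values are made up).
run = {
    "run_id": "run-001",
    "model": "demo-llm",
    "epochs": 3,
    "tags": ["baseline", "english"],  # nested data fits naturally in a document
}

# Relational model: sqlite3 stands in for PostgreSQL in this sketch.
# The schema is fixed up front, so the nested list is serialized to JSON text.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE training_runs "
    "(run_id TEXT PRIMARY KEY, model TEXT, epochs INTEGER, tags TEXT)"
)
conn.execute(
    "INSERT INTO training_runs VALUES (?, ?, ?, ?)",
    (run["run_id"], run["model"], run["epochs"], json.dumps(run["tags"])),
)
row = conn.execute(
    "SELECT model, epochs FROM training_runs WHERE run_id = ?", ("run-001",)
).fetchone()

# Document model: a list of dicts stands in for a MongoDB collection.
# Each record can carry its own fields without a schema change.
collection = [
    run,
    {"run_id": "run-002", "model": "demo-llm", "notes": "this record has no tags"},
]
matches = [doc for doc in collection if "baseline" in doc.get("tags", [])]

print(row)                              # relational lookup result
print([doc["run_id"] for doc in matches])  # document-style filter result
```

The point of the comparison is flexibility: the second record in `collection` has a different shape from the first, which the document model accepts as-is, whereas the relational table would need a schema change to hold a new `notes` column.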

What tools should we consider for coding, version control and developer collaboration?

GPT-4: Git for version control of code and data. GitHub or GitLab for remote repositories, collaboration, and project management.

Software these days is typically built in small batches of code. What should we use to ensure our software development efforts are efficient and that code is deployed quickly and often?

GPT-4: Jenkins, GitLab CI/CD, or GitHub Actions for automating testing, building, and deploying AI models and applications.

Once the model is built and ready to deploy, what tools should we use?

GPT-4: TensorFlow Serving or PyTorch Serve for serving AI models in a production environment. FastAPI or Flask for building RESTful APIs to expose AI models to external applications.
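For readers curious about what "exposing a model through an API" looks like in practice, the sketch below serves a single JSON endpoint and then calls it once. It rests on several assumptions: FastAPI and Flask are third-party packages, so Python's built-in `http.server` is used here as a dependency-free stand-in; the `/generate` path and the `prompt` and `completion` field names are invented for illustration; and `generate_text` is a hypothetical placeholder that simply echoes its input rather than calling a real model.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate_text(prompt: str) -> str:
    """Hypothetical placeholder for a real model call; it only echoes the prompt."""
    return f"(generated continuation of: {prompt})"


class GenerateHandler(BaseHTTPRequestHandler):
    """Handles POST /generate with a JSON body such as {"prompt": "..."}."""

    def do_POST(self):
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        completion = generate_text(payload.get("prompt", ""))
        body = json.dumps({"completion": completion}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        # Silence the default per-request logging.
        pass


def call_once(prompt: str) -> dict:
    """Start the server on a free local port, send one request, then shut down."""
    server = HTTPServer(("127.0.0.1", 0), GenerateHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        client = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        client.request(
            "POST",
            "/generate",
            body=json.dumps({"prompt": prompt}),
            headers={"Content-Type": "application/json"},
        )
        result = json.loads(client.getresponse().read())
        client.close()
        return result
    finally:
        server.shutdown()
        server.server_close()


print(call_once("Hello"))
```

In a production setting, the placeholder function would be replaced by a call to a model server, and a framework such as the ones GPT-4 names would typically add request validation, authentication, and concurrency handling on top of this basic request-and-response pattern.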

As usual, GPT-4 made sure to remind us that this conversation described only a suggested tech stack for a generative AI company, and that it does not necessarily reflect its own stack. Even so, we found the answers extremely enlightening as to who could benefit from this wave. MongoDB stood out in particular, so we decided to keep the conversation going with a few more questions.

If I were the largest and most popular generative AI company to date, why would I pick MongoDB, and if I did, would I choose its Enterprise Advanced offering or the cloud version, named Atlas?

GPT-4: For the largest generative AI company in the world, MongoDB Atlas (cloud-based) is likely the more suitable option, considering the following reasons:

Scalability: As a leading AI company, the need for large-scale storage and processing capabilities is essential. MongoDB Atlas offers automated scalability, making it easy to handle extensive datasets and high throughput requirements associated with large generative AI models.

Maintenance and management: MongoDB Atlas relieves the company from managing, patching, and updating the database, which can significantly reduce operational overhead. This allows the company to focus on AI research, development, and deployment instead of database management tasks.

Integration with cloud services: A top AI company will likely use various cloud-based AI and machine learning services. MongoDB Atlas offers better integration and seamless data exchange with these services, making it a more efficient choice for collaboration with other cloud-based tools.

Global availability and latency: MongoDB Atlas provides options for optimizing network latency, such as selecting regions close to the AI workloads and using Global Clusters to distribute data across regions. This enables the AI company to deliver low-latency services to a global user base.

Flexibility and cost: MongoDB Atlas offers flexible pricing options based on usage, making it easier for the company to adapt to changing needs and budget constraints. This flexibility is advantageous for a fast-growing AI company with evolving requirements.

The choice between using MongoDB Atlas (cloud-based) and MongoDB Enterprise Advanced (on-prem) for deploying large-scale language models depends on your specific requirements, budget, and infrastructure preferences.

While it's impossible to know all the technologies employed by OpenAI for GPT, there is a high likelihood that the companies and technologies discussed during our interview are well-positioned to benefit from the growing popularity of AI tools.

The technology team at Sycomore AM continues its research to identify the various impacts that AI could have on our investment portfolio and on society as a whole. We will soon share our findings regarding the effects that this revolution will have on the global economy. Stay tuned!

References to specific securities and their issuers are for illustrative purposes only and should not be construed as recommendations to buy or sell such securities. The opinions and estimates given are our judgment and are subject to change without notice. We believe that the information provided in this article is reliable, but it should not be considered exhaustive. Your attention is drawn to the fact that any forecast has its own limitations and that consequently no commitment is made by Sycomore AM as to the realization of these forecasts. We recommend that you inform yourself carefully before making an investment decision. Any investment in the UCITS mentioned in this article must be made on the basis of the information notice or the prospectus currently in force. These documents are available on request from Sycomore Asset Management. The fund does not offer any guarantee of return or performance and presents a risk of capital loss.